Photo by Possessed Photography on Unsplash

How to build your own API to detect toxic comments

In this article, you will learn to build an AI-powered API that detects toxic comments

Introduction

Toxicity is anything rude, disrespectful, or otherwise likely to make someone leave a discussion. We will use Django REST Framework to build the API.

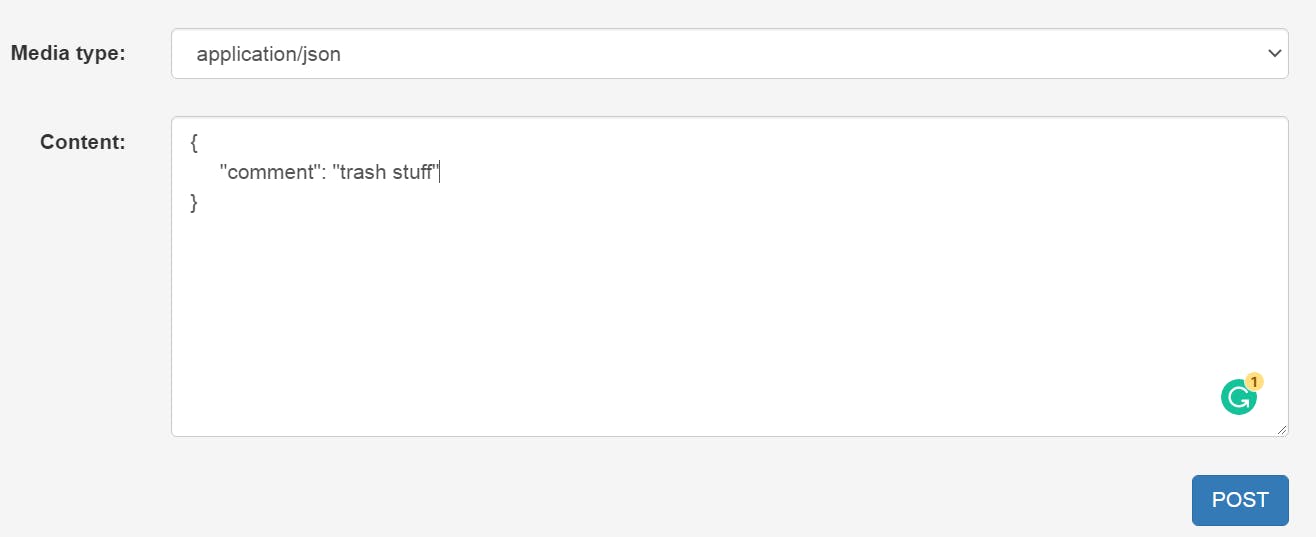

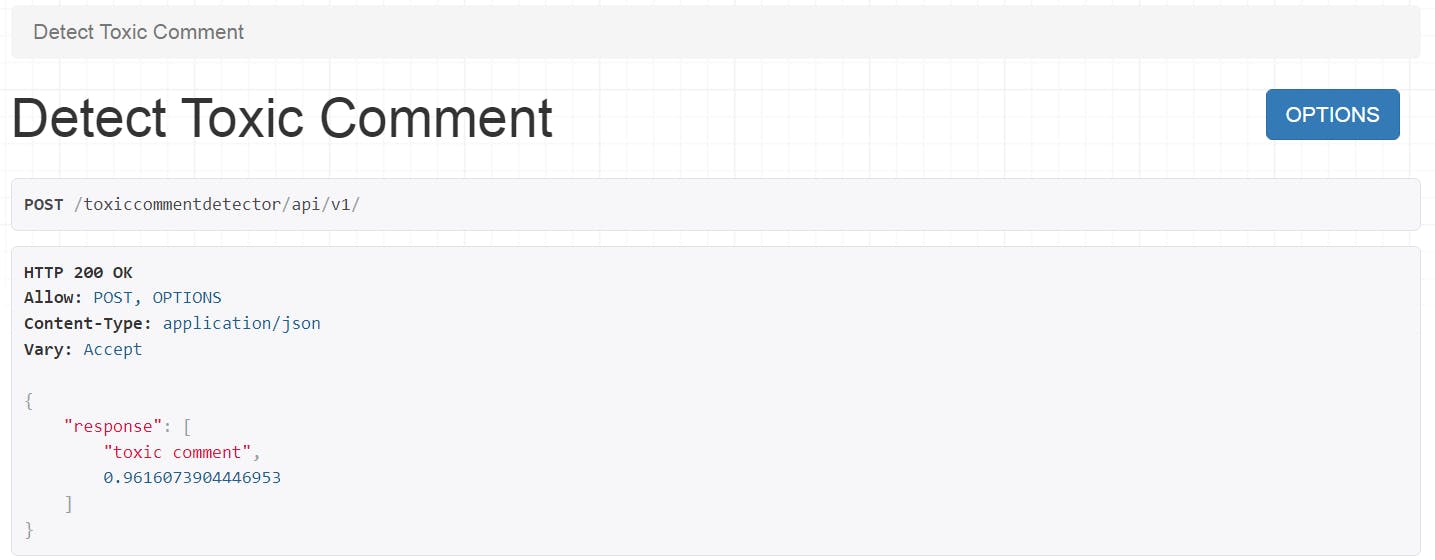

See how the API works:

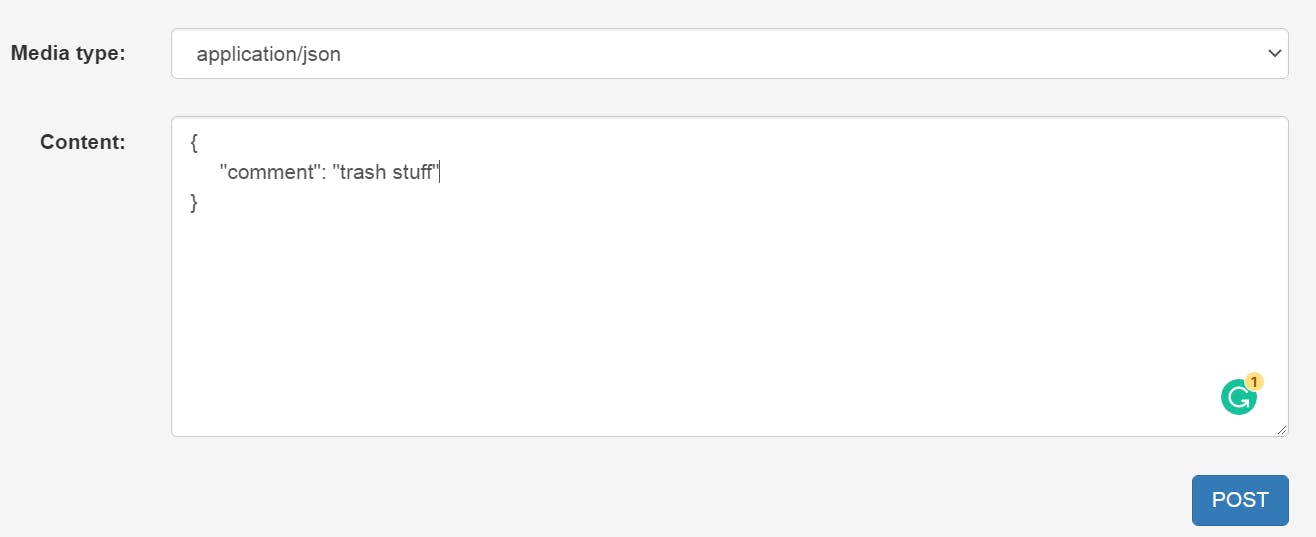

When I enter a comment, for example "trash stuff", as seen below:

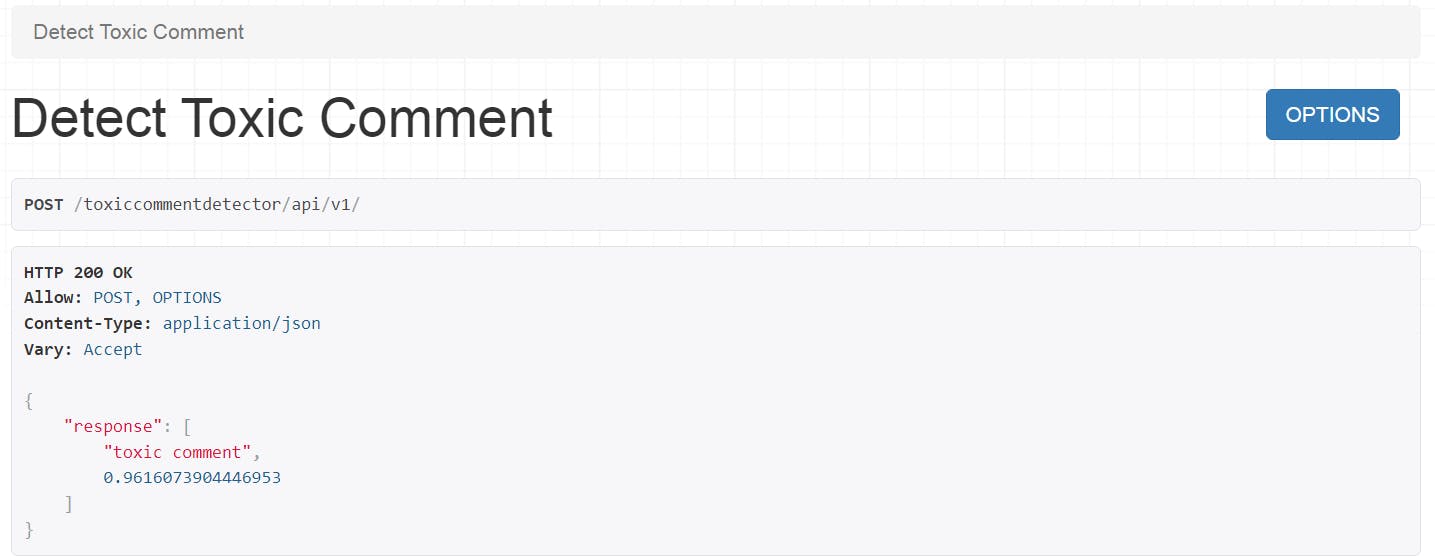

The API will detect whether "trash stuff" is a toxic comment. According to the prediction, "trash stuff" is definitely a toxic comment.

Let's dive in and start building.

Get model and Vectorizer

Download the trained model and the vectorizer, which transforms words into numerical vectors, from here: https://www.kaggle.com/code/emmamichael101/toxicity-bias-logistic-regression-tfidfvectorizer/data?scriptVersionId=113780035

We are using a Logistic Regression model.
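To make the roles of the vectorizer and the model more concrete, here is a minimal sketch of the idea in plain Python. It is not the downloaded model: the word weights and bias are hypothetical values invented for illustration, and simple word counts stand in for the real vectorizer. It only shows how a weighted sum of word features can be squashed into a probability between 0 and 1.

```python
import math
from collections import Counter

# Hypothetical word weights and bias, invented for illustration only.
# The real values live inside the downloaded, trained model.
WEIGHTS = {"trash": 2.5, "stupid": 3.0, "thanks": -2.0, "great": -1.5}
BIAS = -1.0


def vectorize(comment: str) -> Counter:
    """Turn a comment into word counts (a crude stand-in for the real vectorizer)."""
    return Counter(comment.lower().split())


def toxicity_probability(comment: str) -> float:
    """Weighted sum of word counts, squashed into (0, 1) by the sigmoid function."""
    counts = vectorize(comment)
    score = BIAS + sum(WEIGHTS.get(word, 0.0) * n for word, n in counts.items())
    return 1.0 / (1.0 + math.exp(-score))
```

With these made-up weights, a comment containing "trash" scores higher than one containing "thanks", which is the behaviour the trained model learns from real data.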

Setup Django Rest Framework project

- Create a new folder and open it with your code editor

- Run the following command in your terminal to create a new virtual environment called myvenv

python -m venv myvenv

- Activate the virtual environment (the command below is for Windows; on macOS or Linux, run source myvenv/bin/activate instead)

myvenv\Scripts\activate

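- Run the following commands to install Django and Django REST Framework. The downloaded model and vectorizer appear to be pickled scikit-learn objects (the notebook name mentions TfidfVectorizer), so install scikit-learn as well

pip install django

pip install djangorestframework

pip install scikit-learn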

- Create a new Django project called detectorproject and an app called detectorapp

django-admin startproject detectorproject .

python manage.py startapp detectorapp

Open the settings.py file in the detectorproject directory and add rest_framework and detectorapp to the list of installed apps:

    INSTALLED_APPS = [
        ...
        'rest_framework',
        'detectorapp',
    ]

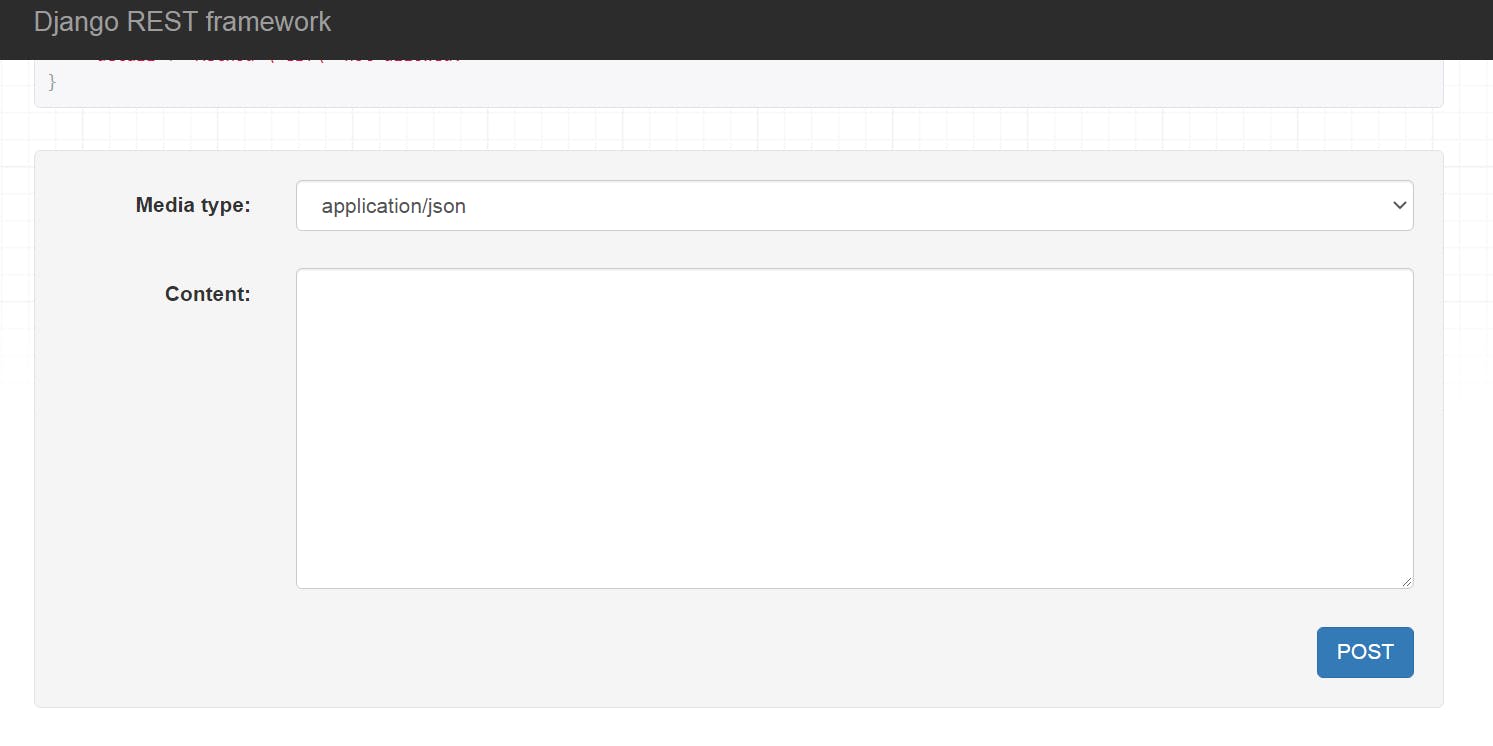

Add model and vectorizer

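Add the downloaded model (Pickle_LR_Model.pkl) and vectorizer (Vectorize.pickle) to the project root, the same folder that contains manage.py. The code loads them with relative paths, so they must sit in the folder you run manage.py from.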

services.py

Create a services.py file in the detectorapp directory and add the following code:

    import pickle

    from rest_framework import status
    from rest_framework.response import Response


    def check_toxicity(comment):
        # Load the vectorizer and turn the comment into a numerical vector.
        with open("./Vectorize.pickle", "rb") as file:
            vectorizer = pickle.load(file)
        features = vectorizer.transform([comment])

        # Load the trained Logistic Regression model.
        with open("./Pickle_LR_Model.pkl", "rb") as file:
            lr_model = pickle.load(file)

        # Probability of the toxic class, from 0.0 (non-toxic) to 1.0 (toxic).
        # predict_proba returns one row per input, so take the first row.
        prediction = float(lr_model.predict_proba(features)[0, 1])

        if prediction >= 0.9:
            label = "toxic comment"
        elif prediction <= 0.1:
            label = "non toxic comment"
        else:
            label = "Manually check this"

        return Response({"response": [label, prediction]}, status=status.HTTP_200_OK)

The check_toxicity function receives a comment, loads the vectorizer to transform the comment into numerical values, and then passes those values to the model to predict the toxicity of the comment. The model returns a probability between 0.0 and 1.0.
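Because check_toxicity reads both pickle files from disk on every call, one possible refinement is to cache the loaded objects. The sketch below uses functools.lru_cache from the standard library. The helper name load_pickle is hypothetical and not part of the original project.

```python
import pickle
from functools import lru_cache


@lru_cache(maxsize=None)
def load_pickle(path: str):
    """Unpickle the file at `path` once; later calls reuse the cached object."""
    with open(path, "rb") as file:
        return pickle.load(file)
```

Inside check_toxicity, the two open/pickle.load steps could then become load_pickle("./Vectorize.pickle") and load_pickle("./Pickle_LR_Model.pkl"), so each file is read only on the first request.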

A prediction close to 0.0 signifies a strongly non-toxic comment, while a prediction close to 1.0 signifies a strongly toxic comment.

For the API, I treat predictions of 0.9 or higher as toxic comments, since the model is quite confident in that range, and predictions of 0.1 or lower as non-toxic comments. Anything strictly between 0.1 and 0.9 is neither clearly toxic nor clearly non-toxic, so the API responds with "Manually check this".
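The three-way decision can also be written as a small standalone function, which makes the cut-offs easy to tune and test. This is a sketch: the function name label_prediction and its threshold parameters are assumptions for illustration, while the default values and labels mirror the ones used in services.py.

```python
def label_prediction(probability: float,
                     toxic_threshold: float = 0.9,
                     non_toxic_threshold: float = 0.1) -> str:
    """Map a toxicity probability to one of the three labels used by the API."""
    if probability >= toxic_threshold:
        return "toxic comment"
    if probability <= non_toxic_threshold:
        return "non toxic comment"
    # Everything strictly between the two thresholds needs a human look.
    return "Manually check this"
```

Lowering toxic_threshold would flag more comments automatically at the cost of more false positives, so the right values depend on how much manual review you can afford.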

views.py

In the views.py file of the detectorapp directory, add the following code:

    from rest_framework.views import APIView

    from .services import check_toxicity


    class DetectToxicComment(APIView):
        def post(self, request, format=None):
            # Read the comment from the request body and return the prediction.
            comment = request.data.get('comment')
            return check_toxicity(comment)

We import the APIView class from rest_framework.views to define a class-based REST framework view.

We import check_toxicity from the services.py file in the detectorapp directory.

DetectToxicComment reads the comment from the POST request, calls the check_toxicity function to predict its toxicity, and then returns the resulting prediction.
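The view passes along whatever request.data.get('comment') returns, so a request without a comment field would hand None to the vectorizer. One way to guard against that is a small validation helper like the sketch below. The name extract_comment and the error message are assumptions, not part of the original code.

```python
from typing import Any, Mapping


def extract_comment(data: Mapping[str, Any]) -> str:
    """Return the stripped 'comment' value, or raise ValueError if it is unusable."""
    comment = data.get("comment")
    if not isinstance(comment, str) or not comment.strip():
        raise ValueError("The 'comment' field must be a non-empty string.")
    return comment.strip()
```

In the post method, you could call extract_comment(request.data) inside a try block and, on ValueError, return a Response carrying the error message with status.HTTP_400_BAD_REQUEST instead of calling check_toxicity.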

urls.py

- Create a urls.py file in the detectorapp directory and add the following code:

    from django.urls import path

    from . import views

    app_name = 'detectorapp'

    urlpatterns = [
        path('', views.DetectToxicComment.as_view(), name='detect_toxic_comment'),
    ]

- Update the urls.py file in the detectorproject directory with the following code to include the URLs of detectorapp

    from django.contrib import admin
    from django.urls import path, include

    urlpatterns = [
        path('admin/', admin.site.urls),
        path('toxiccommentdetector/api/v1/', include('detectorapp.urls')),
    ]

Evaluate API

- Run the following command on the terminal to start the development server

python manage.py runserver

- Open the following link in your web browser: http://127.0.0.1:8000/toxiccommentdetector/api/v1/ and you will see the Django REST Framework page for the endpoint

- Enter a comment as JSON in the Content section and click POST. For example: {"comment": "trash stuff"}

- The response will indicate that "trash stuff" is a toxic comment
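The same check can be done from a script instead of the browser. The sketch below uses only Python's standard library and assumes the development server is running at the local address used above. The helper names build_request and detect are hypothetical.

```python
import json
import urllib.request

# URL from the article; assumes the development server is running locally.
API_URL = "http://127.0.0.1:8000/toxiccommentdetector/api/v1/"


def build_request(comment: str, url: str = API_URL) -> urllib.request.Request:
    """Build a JSON POST request carrying the comment under the 'comment' key."""
    body = json.dumps({"comment": comment}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def detect(comment: str, url: str = API_URL) -> dict:
    """Send the comment to the API and return the decoded JSON response."""
    with urllib.request.urlopen(build_request(comment, url)) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the server running, calling detect("trash stuff") should return a dictionary whose "response" entry holds the label and the predicted probability, matching what services.py sends back.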

Conclusion

Thanks for reading. Get the code here: https://github.com/emmakodes/toxiccommentdetectorapi